CAVEON SECURITY INSIGHTS BLOG

The World's Only Test Security Blog

Pull up a chair among Caveon's experts in psychometrics, psychology, data science, test security, law, education, and oh-so-many other fields and join in the conversation about all things test security.

Constructing Test Items (Guidelines & 7 Common Item Types)

Posted by Erika Johnson

updated over a week ago

Introduction

Let's say you have been given the task of building an examination for your organization.

Finally (after spending two weeks panicking about how you would do this and definitely not procrastinating on the work that must be done), you are ready to begin the test development process.

But you can't help but ask yourself:

- Where in the world do you begin?

- Why do you need to create this exam?

- And while you know you need to construct test items, which item types are the best fit for your exam?

- Who is your audience?

- How do you determine all that?

Luckily for you, Caveon has an amazing team of experts on hand to help with every step of the way: Caveon Secure Exam Development (C-SEDs). Whether working with our team or trying your hand at test development yourself, here's some information on item best practices to help guide you on your way.

Table of Contents

- Determine Your Purpose for Testing

- Factors to Consider when Constructing Your Test

- General Guidelines for Constructing Test Items

- Conclusion & More Resources

Determine Your Purpose for Testing: Why and Who

First things first.

Before creating your test, you need to determine your purpose:

- Why you are testing your candidates, and

- Who exactly will be taking your exam

Assessing your testing program's purpose (the "why" and "who" of your exam) is the first vital step of the development process. You do not want to test just to test; you want to scope out the reason for your exam. Ask yourself:

- Why is this exam important to your organization?

- What are you trying to achieve with having your test takers sit for it?

Consider the following:

- Is your organization interested in testing to see what was learned at the end of a course presented to students?

- Are you looking to assess if an applicant for a job has the necessary knowledge to perform the role?

- Are candidates trying to obtain certification within a certain field?

The Benefits of Identifying Your Exam's Purpose

Learning the purpose of your exam will help you come up with a plan on how best to set up your exam—which exam type to use, which type of exam items will best measure the skills of your candidates (we will discuss this in a minute), etc.

Determining your test's purpose will also help you identify your testing audience, which in turn helps ensure your exam tests examinees at the right level.

Whether they are students still in school, individuals looking to qualify for a position, or experts looking to get certification in a certain product or field, it’s important to make sure your exam is actually testing at the appropriate level.

For example, your test scores will not be valid if your items are too easy or too hard, so keeping the minimally qualified candidate (MQC) in mind during all of the steps of the exam development process will ensure you are capturing valid test results overall.

What Is the MQC?

MQC is the acronym for “minimally qualified candidate.”

The MQC is a conceptualization of the assessment candidate who possesses the minimum knowledge, skills, experience, and competence to just meet the expectations of a credentialed individual.

If the credential is entry level, the expectations of the MQC will be less than if the credential is designated at an intermediate or expert level.

Think of an ability continuum that goes from low ability to high ability. Somewhere along that ability continuum, a cut point will be set. Those candidates who score below that cut point are not qualified and will fail the test. Those candidates who score at or above that cut point are qualified and will pass.

The minimally qualified candidate, though, should just barely make the cut. It’s important to focus on the word “qualified,” because even though this candidate will likely gain more expertise over time, they are still deemed to have the requisite knowledge and abilities to perform the job or understand the subject.

Factors to Consider when Constructing Your Test

Now that you’ve determined the purpose of your exam and identified the audience, it’s time to decide on the exam type and which item types to use that will be most appropriate to measure the skills of your test takers.

First up, your exam type.

The type of exam you choose depends on what you are trying to test and the kind of tool you are using to deliver your exam.

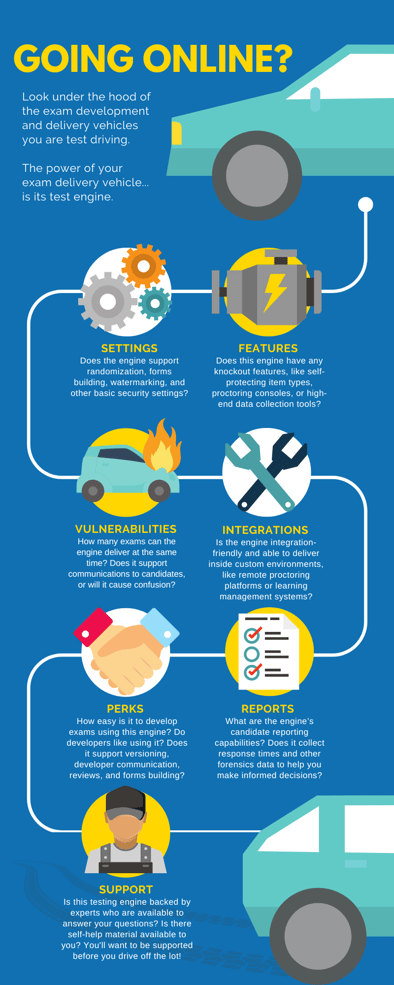

You should always make sure the software you use to develop and deliver your exam is thoroughly vetted.

Next up, the type of exam and items you choose.

Common Exam Types

The type of exam and type(s) of items you choose depend on your measurement goals and what you are trying to assess. It is essential to take all of this into consideration before moving forward with development.

Here are some common exam types to consider:

Fixed-Form Exam

Fixed-form delivery is a method of testing where every test taker receives the same items. An organization can have more than one fixed form in rotation, each using the same items in a randomized order. Additionally, forms can be built from a larger item bank and published with a fixed set of items equated to comparable difficulty and content coverage.

Computer Adaptive Testing (CAT)

A CAT exam is a test that adapts to the candidate's ability in real time by selecting different questions from the item bank, which provides a more accurate measurement of their ability level on a common scale. Every time a test taker answers an item, the computer re-estimates the test taker's ability based on all the previous answers and the difficulty of those items. The computer then selects as the next item one that the test taker should have a 50% chance of answering correctly.
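
The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not Caveon's engine or any production CAT algorithm. It assumes a one-parameter (Rasch) response model, a simple grid-search ability estimate, and a hypothetical item bank with made-up item IDs and difficulties. Under the Rasch assumption, the item a test taker has a 50% chance of answering correctly is the one whose difficulty equals their current ability estimate.

```python
import math


def p_correct(theta, b):
    """Rasch model (an assumption): chance that ability theta answers difficulty b correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_ability(responses):
    """Re-estimate ability from all answers so far.

    responses: list of (item_difficulty, answered_correctly) pairs.
    Uses a grid search over [-4, 4] for the maximum-likelihood ability,
    so all-correct or all-incorrect histories land on the grid boundary.
    """
    grid = [x / 100 for x in range(-400, 401)]

    def log_likelihood(theta):
        total = 0.0
        for b, correct in responses:
            p = p_correct(theta, b)
            total += math.log(p if correct else 1.0 - p)
        return total

    return max(grid, key=log_likelihood)


def next_item(theta, bank, used):
    """Pick the unused item whose difficulty is closest to theta (about a 50% chance)."""
    candidates = [item_id for item_id in bank if item_id not in used]
    return min(candidates, key=lambda item_id: abs(bank[item_id] - theta))


# Hypothetical bank: item ID -> difficulty on the same scale as ability.
bank = {"item_a": -1.0, "item_b": 0.2, "item_c": 1.5}
history = [(0.0, True), (0.0, False)]  # one right, one wrong at difficulty 0.0
theta = estimate_ability(history)
print(theta, next_item(theta, bank, used=set()))
```

Operational CAT programs typically add constraints this sketch ignores, such as content balancing, item exposure control, and a stopping rule.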

Linear on the Fly Testing (LOFT)

A LOFT exam is a test where the items are drawn from an item bank and presented so that each person sees a different set of items. The difficulty of the overall test is controlled to be equal for all examinees. Because LOFT depends on a large item bank, programs often use automated item generation (AIG) to help build one.
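
One way to picture LOFT assembly is as a random draw that is retried until the form's average difficulty lands close to a target. The sketch below is only an illustration under stated assumptions: the function name `assemble_loft_form`, the bank of made-up item IDs, the difficulty values, and the tolerance are all hypothetical, and real LOFT engines usually also enforce a content blueprint and exposure limits.

```python
import random
from statistics import mean


def assemble_loft_form(bank, n_items, target, tolerance, rng, max_tries=10_000):
    """Draw a random form whose mean difficulty is within tolerance of target.

    bank: dict mapping item ID -> difficulty (hypothetical values).
    rng: a random.Random instance, so draws can be reproduced.
    """
    item_ids = sorted(bank)
    for _ in range(max_tries):
        form = rng.sample(item_ids, n_items)  # no item repeats within a form
        if abs(mean(bank[i] for i in form) - target) <= tolerance:
            return form
    raise RuntimeError("No form met the difficulty target; widen the tolerance or the bank.")


# Hypothetical 20-item bank with difficulties spread evenly around 0.
bank = {f"item{k}": -1.9 + 0.2 * k for k in range(20)}
rng = random.Random(7)
form_1 = assemble_loft_form(bank, n_items=5, target=0.0, tolerance=0.15, rng=rng)
form_2 = assemble_loft_form(bank, n_items=5, target=0.0, tolerance=0.15, rng=rng)
print(form_1, form_2)
```

Each call returns a different set of items for a different candidate, while the tolerance keeps the forms comparable in overall difficulty.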

The above three exam types can be used with any standard item type.

Before moving on, however, there is another more innovative exam type to consider if your delivery method allows for it:

Performance-Based Testing

A performance-based assessment measures the test taker's ability to apply skills and knowledge in practice, going beyond what typical study methods demonstrate and drawing on what was learned through research and experience. For example, a test taker in a medical field may be asked to draw blood from a patient to show they can competently perform the task. Or a test taker wanting to become a chef may be asked to prepare a specific dish to ensure they can execute it properly.

Common Item Types

Once you've decided on the type of exam you'll use, it's time to choose your item types.

There are many different item types to choose from (you can check out a few of our favorites in this article).

While utilizing more item types on your exam won’t ensure you have more valid test results, it’s important to know what’s available in order to decide on the best item format for your program.

Here are a few of the most common items to consider when constructing your test:

Multiple-Choice

A multiple-choice item is a question where a candidate is asked to select the correct response from a choice of four (or more) options.

Multiple Response

A multiple response item is an item where a candidate is asked to select more than one response from a pool of options (e.g., “choose two,” “choose three”).

Short Answer

Short answer items ask a test taker to synthesize, analyze, and evaluate information, and then to present it coherently in written form.

Matching

A matching item requires test takers to connect a definition/description/scenario to its associated correct keyword or response.

Build List

A build list item challenges a candidate’s ability to identify and order the steps/tasks needed to perform a process or procedure.

Discrete Option Multiple Choice™ (DOMC)

DOMC™ is known as the “multiple-choice item makeover.” Instead of showing all the answer options at once, DOMC presents the options one at a time in random order. For each option, the test taker chooses “yes” or “no.” As soon as the responses determine whether the question has been answered correctly or incorrectly, the next question is presented. DOMC has been used by award-winning testing programs to prevent cheating and test theft. You can learn more about the DOMC item type in this white paper.
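
The yes/no flow described above can be sketched as follows. This is a rough illustration and not Caveon's implementation. The stopping rule is an assumption inferred from the description: the item ends as correct when the test taker says "yes" to the correct option, and as incorrect when they say "yes" to a wrong option or "no" to the correct one. The function name `administer_domc` and the sample options are hypothetical.

```python
import random


def administer_domc(options, key, respond, rng):
    """Present options one at a time in random order until the item is decided.

    options: list of option texts, including the key.
    key: the correct option.
    respond: callable taking an option and returning True for "yes", False for "no".
    Returns (answered_correctly, options_shown).
    """
    order = list(options)
    rng.shuffle(order)
    shown = []
    for option in order:
        shown.append(option)
        said_yes = respond(option)
        if option == key:
            # "Yes" to the key is correct; "no" to the key is incorrect (assumed rule).
            return said_yes, shown
        if said_yes:
            # Endorsing a wrong option ends the item as incorrect (assumed rule).
            return False, shown
    raise ValueError("The key must be one of the options.")


options = ["flour", "gravel", "motor oil", "sand"]
knows_answer = lambda option: option == "flour"  # simulated test taker
correct, shown = administer_domc(options, "flour", knows_answer, random.Random(1))
print(correct, shown)
```

Because the item ends as soon as it is decided, a test taker often never sees every option, which limits how much of the item can be memorized and shared.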

SmartItem™

A self-protecting item, otherwise known as a SmartItem, employs a proprietary technology resistant to cheating and theft. A SmartItem contains multiple variations, all of which work together to cover an entire learning objective completely. Each time the item is administered, the computer generates a random variation. SmartItem technology has numerous benefits, including curbing item development costs and mitigating the effects of testwiseness. You can learn more about the SmartItem in this infographic and this white paper.

What Are the General Guidelines for Constructing Test Items?

Regardless of the exam type and item types you choose, focusing on some best practice guidelines can set up your exam for success in the long run.

There are many guidelines for creating tests (see this handy guide, for example), but this list sticks to the most important points. Little things can really make a difference when developing a valid and reliable exam!

Institute Fairness

Although you want to ensure that your items are difficult enough that not everyone gets them correct, you never want to trick your test takers! Keeping your wording clear and making sure your questions are direct and not ambiguous is very important. For example, asking a question such as “What is the most important ingredient to include when baking chocolate chip cookies?” does not set your test taker up for success. One person may argue that sugar is the most important, while another test taker may say that the chocolate chips are the most necessary ingredient. A better way to ask this question would be “What is an ingredient found in chocolate chip cookies?” or “Place the following steps in the proper order when baking chocolate chip cookies.”

Stick to the Topic at Hand

When creating your items, ensuring that each item aligns with the objective being tested is very important. If the objective asks the test taker to identify genres of music from the 1990s, and your item is asking the test taker to identify different wind instruments, your item is not aligning with the objective.

Ensure Item Relevancy

Your items should be relevant to the task that you are trying to test. Coming up with ideas to write on can be difficult, but avoid asking your test takers to identify trivial facts about your objective just to find something to write about. If your objective asks the test taker to know the main female characters in the popular TV show Friends, asking the test taker what color Rachel’s skirt was in episode 3 is not an essential fact that anyone would need to recall to fully understand the objective.

Gauge Item Difficulty

As discussed above, remembering your audience when writing your test items can make or break your exam. To put it into perspective, if you are writing a math exam for a fourth-grade class, but you write all of your items on advanced trigonometry, you have clearly not met the difficulty level for the test taker.

Inspect Your Options

When writing your options, keep these points in mind:

- Always make sure your correct option is 100% correct, and your incorrect options are 100% incorrect. By using partially correct or partially incorrect options, you will confuse your candidate. Doing this could keep a truly qualified candidate from answering the item correctly.

- Make sure your distractors are plausible. If your correct response logically answers the question being asked, but your distractors are made up or even silly, it will be very easy for any test taker to figure out which option is correct. Thus, your exam will not properly discriminate between qualified and unqualified candidates.

- Try to make your options parallel to one another. Ensuring that your options are all worded similarly and are approximately the same length will keep one from standing out from another, helping to remove that testwiseness effect.
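
After an exam has been delivered, the points above can be checked against response data. The sketch below is a simplified item analysis under stated assumptions: it uses the upper-lower discrimination index with a 27% group size (a common convention, not something this article prescribes), and the function name `analyze_item` and the response data are made up for illustration.

```python
from collections import Counter


def analyze_item(responses, key, options, fraction=0.27):
    """Summarize one multiple-choice item from (total_score, chosen_option) pairs.

    difficulty: proportion of all candidates who chose the key.
    discrimination: proportion correct in the top-scoring group minus the
        proportion correct in the bottom-scoring group.
    unused_distractors: wrong options nobody chose, a hint they may be implausible.
    """
    ranked = sorted(responses, key=lambda r: r[0])
    n_group = max(1, round(len(ranked) * fraction))
    lower, upper = ranked[:n_group], ranked[-n_group:]
    p_upper = sum(choice == key for _, choice in upper) / n_group
    p_lower = sum(choice == key for _, choice in lower) / n_group
    counts = Counter(choice for _, choice in responses)
    return {
        "difficulty": counts[key] / len(responses),
        "discrimination": p_upper - p_lower,
        "unused_distractors": [o for o in options if o != key and counts[o] == 0],
    }


# Made-up data: (candidate's total exam score, option chosen on this item).
responses = [(1, "A"), (2, "C"), (3, "A"), (4, "B"), (5, "C"),
             (6, "B"), (7, "B"), (8, "B"), (9, "B"), (10, "B")]
print(analyze_item(responses, key="B", options=["A", "B", "C", "D"]))
```

A discrimination value near zero or below suggests the item is not separating stronger candidates from weaker ones, and an unused distractor is a candidate for rewriting.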

Conclusion

Constructing test items and creating entire examinations is no easy undertaking.

This article will hopefully help you identify your specific purpose for testing and determine the exam and item types you can use to best measure the skills of your test takers.

We’ve also gone over general best practices to consider when constructing items, and we’ve sprinkled helpful resources throughout to help you on your exam development journey.

(Note: This article helps you tackle the first step of the 8-step assessment process: Planning & Developing Test Specifications.)

To learn more about creating your exam—including how to increase the usable lifespan of your exam—review our ultimate guide on secure exam creation and also our workbook on evaluating your testing engine, leveraging secure item types, and increasing the number of items on your tests.

And if you need help constructing your exam and/or items, our award-winning exam development team is here to help!

Erika Johnson

Erika is an Exam Development Manager in Caveon’s C-SEDs group. With almost 20 years in the testing industry, nine of which have been with Caveon, Erika is a veteran of both exam development and test security. Erika has extensive experience working with new, innovative test designs, and she knows how to best keep an exam secure and valid.

About Caveon

For more than 18 years, Caveon Test Security has driven the discussion and practice of exam security in the testing industry. Today, as the recognized leader in the field, we have expanded our offerings to encompass innovative solutions and technologies that provide comprehensive protection: Solutions designed to detect, deter, and even prevent test fraud.
